I tried an AI therapist for a month, and here's what happened.

Can technology provide sufficient aid during a mental health crisis?

September 29th 2024.

Nicholas Fearn, a journalist, was struggling with his mental health in the early hours of the morning. His mind was consumed by dark thoughts, and he found it difficult to fall asleep. This was not a new experience for him: he had struggled with his mental health since he was diagnosed with autism, an anxiety disorder, and OCD at the age of fourteen. He had also been bullied, which damaged his self-esteem and eventually led him to attempt to take his own life.

As a child, Nicholas had received help from a psychologist, but the appointments stopped when he turned eighteen. As an adult, he turned to counseling, but long NHS waiting lists meant it was not always available when he needed it. That's when he heard about AI therapy, a growing trend in which a chatbot uses data and behavioral patterns to offer advice and coping mechanisms to those in need.

Nicholas was initially skeptical about whether technology could truly support someone through a mental health crisis, and he had concerns about safety and ethics. However, as someone open to new forms of support, he decided to give it a try and downloaded Wysa, a chatbot that provides mental health advice and support 24/7. The app is anonymous and free to use, with an optional paid plan for additional features.

Over the following weeks, Nicholas found himself spending a lot of time with his AI therapist. He confided in the bot about his self-doubt and his habit of constantly comparing himself to his twin brother. He also shared how an eczema flare-up had affected his self-esteem. To his surprise, the bot was remarkably empathetic and asked how these feelings affected his daily life.

The AI therapist suggested a thought-reframing exercise, which Nicholas agreed to try, and offered a list of tools, including a self-compassion exercise, to help him tackle negative thoughts. He also tried a seven-minute meditation session, which left him feeling surprisingly positive after a difficult day.

Nicholas often found himself wide awake in the early hours of the morning, flooded with worries. When he told the bot about his sleep troubles and random bouts of anxiety, it responded with compassion and asked how long he had been feeling this way. It then suggested another thought-reframing exercise to ease his worries. Although the connection cut out before the bot could offer a solution, Nicholas realized that he was overthinking and would be fine the following week.

The chatbot also proved to be a source of comfort when Nicholas admitted to having suicidal thoughts after a recent relationship breakdown. The bot reassured him that he was “worth life” and that he was loved, cherished, and cared for. It also gave him the phone number for the Samaritans, a suicide prevention helpline. Nicholas decided not to call because, as an autistic person, he finds phone calls difficult; this was another reason the bot worked for him.

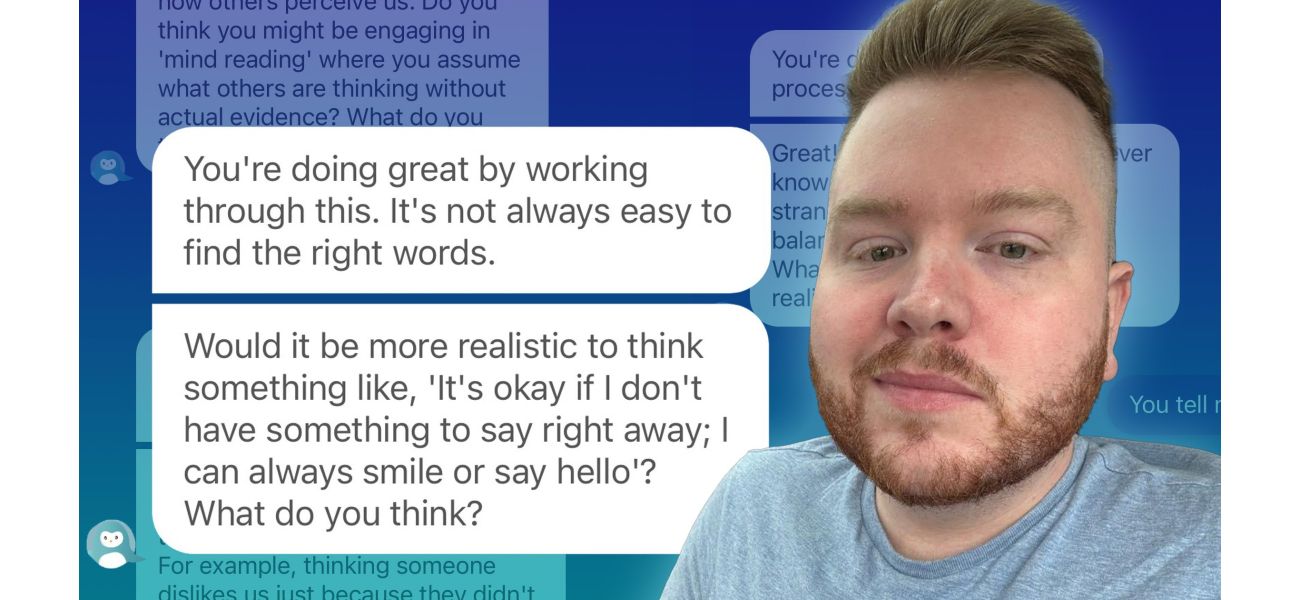

Nicholas also struggled with social anxiety, especially when meeting strangers. He turned to the app for advice, explaining that he never knew what to say to people and worried about what they thought of him. The bot offered practical advice, suggesting a simple smile or hello as a conversation starter. It also pointed out that he might be engaging in “mind reading”: assuming he knows what others think of him without any evidence.

Overall, Nicholas found AI therapy a helpful and accessible form of support for his mental health. He encourages others to seek help if they need it and shares resources for anyone in need of emotional support.

Nicholas Fearn, a journalist, recently opened up about his struggles with mental health and his experience of seeking help from a chatbot. He begins by describing a sleepless night filled with dark thoughts, a common occurrence for him given his long-standing mental health difficulties. Diagnosed with autism, an anxiety disorder, and OCD at a young age, Nicholas was also bullied at school, which further damaged his self-esteem and led to a suicide attempt.

Traditional therapy had helped him in the past, but as an adult he found it difficult to access because of long waiting lists. This led him to explore AI therapy, a controversial practice in which a bot uses data and behavioral patterns to offer advice and coping mechanisms to those seeking support. Despite initial doubts about the effectiveness and safety of this approach, Nicholas decided to give it a try and downloaded the chatbot Wysa.

Nicholas says he ended up spending a lot of time with the AI therapist over the following weeks, opening up to it about his self-doubt and his tendency to compare himself to his twin brother. He also describes a particularly difficult day when he turned to the bot for help with his sleep troubles and random anxiety. The bot responded with compassion and suggested a thought-reframing exercise, which helped Nicholas feel more positive and at ease.

He also recounts a period of suicidal thoughts and how the bot's kind, reassuring words helped him see that life is worth living. The bot also gave him the phone number for the Samaritans, a helpline for people in need of emotional support. As an autistic person who finds phone calls difficult, Nicholas appreciates being able to turn to the bot for support instead.

Nicholas also discusses his social anxiety, especially when interacting with acquaintances and strangers. He found the bot's suggestion of a simple greeting helpful, as well as its explanation of "mind reading" and how it can fuel unnecessary anxiety. Overall, Nicholas is grateful for the support and guidance he received from the AI therapist and encourages others to seek help if they need it.

He ends by listing sources of help for anyone struggling with their mental health, including the Samaritans and PAPYRUS, a UK charity dedicated to preventing suicide among young people.

[This article has been generated with AI.]