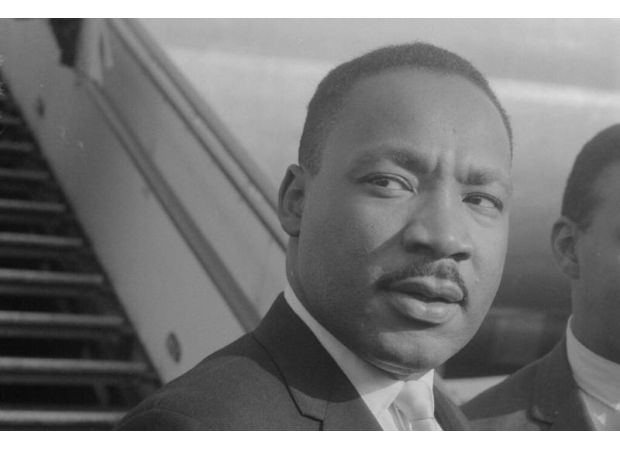

OpenAI will no longer let users create AI-generated videos of Martin Luther King Jr. after his family expressed concerns.

Generative AI's novelty may come at a high cost, potentially risking the health and lives of marginalized communities.

According to a previous report by BLACK ENTERPRISE, Bernice King, daughter of the late civil rights leader Dr. Martin Luther King Jr., recently joined forces with Zelda Williams, daughter of the late comedian Robin Williams, to speak out against the use of OpenAI's Sora 2 tool to create inappropriate videos of their fathers. They made it clear that neither man would have consented to such use of his likeness had he lived.

As CNN reported, this public outcry led to conversations between Bernice King and OpenAI, as well as with John Hope Bryant and the AI Ethics Council. As a result, on October 16, OpenAI released a statement announcing that it would allow authorized representatives or estate owners to request that a public figure's likeness not be used in Sora 2.

The joint statement from OpenAI and King Estate, Inc. acknowledged that there are free speech interests in depicting historical figures, but concluded that public figures and their families should ultimately have control over how their likenesses are used. The decision followed extended dialogue with the AI Ethics Council, but the episode raises broader questions about the ethics of the tech industry as a whole.

OpenAI's decision to launch Sora 2 without proper guardrails for the use of deceased individuals' likenesses is just one example of how the tech industry often neglects ethical implications in its pursuit of innovation. Other examples include consuming finite environmental resources, training models on datasets gathered without consent, and building data centers that strain power grids without the consent of the residents who foot the bill for higher utility costs.

Experts have also raised concerns that Sora 2's realistic content could be used for large-scale media manipulation. As Lance Ulanoff pointed out in a TechRadar op-ed, OpenAI has a pattern of rolling out new features without considering the potential consequences, then scrambling to fix them after they have caused harm.

While OpenAI's decision to restrict the use of deceased public figures' likenesses in Sora 2 is a step in the right direction, it does not address the underlying problems with the technology's business model or its lack of an ethical framework. As Vice noted, even with the added watermark, misinformation could still spread among a population that has long struggled with media literacy.

It is important to hold companies like OpenAI accountable for the ethical implications of their technology, especially when it affects marginalized communities. The recent NAACP challenge to Elon Musk's xAI facility in Memphis highlights the potential health risks to Black residents and the need for a more comprehensive ethical framework in the tech industry. While OpenAI's efforts to address this particular issue are welcome, much work remains to ensure that new technologies are developed and used ethically.