Data, Transparency, and Regulation

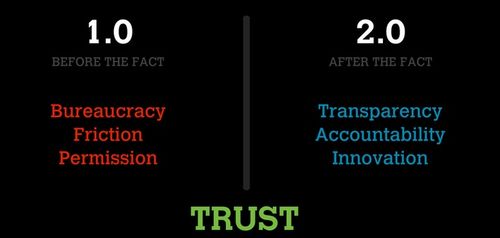

Last month, I pointed to a talk that Nick Grossman gave at Princeton where he laid out the principles of Regulation 2.0. This slide is from that talk.

Regulation 2.0 is a framework that we have been working on with a bunch of others who are rethinking what government means in a networked world. In the Regulation 1.0 world (the one we are in now), regulators are required to give you permission to do things. In a Regulation 2.0 world, as long as you report openly and transparently about what you are doing to the government and everyone else, you are free to innovate and operate. But you are accountable for living up to the rules that the regulators set, and the data you report about your actions will be measured against those rules.

I thought about all of that when I read about Mayor Bloomberg's team of data crunchers in today's New York Times. This group, which is described as "the half-dozen post-collegiate techies", operates with a budget of less than $1mm a year and yet has been able to solve many tricky problems for city hall in the past three years.

This story is a good example:

Last fall, the city’s Department of Environmental Protection wanted, finally, to crack down on restaurants that were illegally dumping cooking oil into sewers in their neighborhoods.

The antiquated answer would have been to have the health department send inspectors to restaurants on blocks with backed-up sewers and hope by chance to catch a busboy pouring the contents of a deep fryer into the street.

number-crunchers working from a pair of cluttered cubicles across from City Hall in the Municipal Building dug up data from the Business Integrity Commission, an obscure city agency that among other tasks certifies that all local restaurants have a carting service to haul away their grease. With a few quick calculations, comparing restaurants that did not have a carter with geo-spatial data on the sewers, the team was able to hand inspectors a list of statistically likely suspects.

The result: a 95 percent success rate in tracking down the dumpers. With nothing grander than public data, the Case of the Grease-Clogged Sewers was solved.
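To make the mechanics concrete, here is a minimal Python sketch of that kind of cross-reference: drop the restaurants that have a carter, then rank the rest by how many sewer backups sit nearby. Everything in it is an assumption made for illustration, including the `Restaurant` record, the `likely_dumpers` function, the flat x/y coordinates, the radius, and the sample data. It is not the city team's actual method or data.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Restaurant:
    name: str
    x: float          # hypothetical map coordinates, arbitrary units
    y: float
    has_carter: bool  # True if a grease carting service is on file


def likely_dumpers(restaurants, backups, radius=1.0):
    """Rank restaurants without a carter by the number of nearby sewer backups."""
    suspects = []
    for r in restaurants:
        if r.has_carter:
            continue  # a carter on file means the grease is being hauled away
        nearby = sum(
            1 for (bx, by) in backups
            if hypot(r.x - bx, r.y - by) <= radius
        )
        if nearby > 0:
            suspects.append((r.name, nearby))
    # Most nearby backups first
    return sorted(suspects, key=lambda s: s[1], reverse=True)


# Made-up sample data
restaurants = [
    Restaurant("A", 0.0, 0.0, False),
    Restaurant("B", 5.0, 5.0, False),
    Restaurant("C", 0.2, 0.1, True),
    Restaurant("D", 3.0, 3.0, False),
]
backups = [(0.1, 0.1), (0.5, 0.0), (3.2, 3.1), (9.0, 9.0)]

suspects = likely_dumpers(restaurants, backups)
print(suspects)
```

With this sample data, restaurant A comes out on top of the list and restaurant C never appears, because it has a carter on file even though it sits next to two backups.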

This story reminds me so much of the story Steven Johnson told in The Ghost Map and many of the stories he tells in his current book, Future Perfect. It should not be a surprise that Steven is one of the folks who have been working with us on this Regulation 2.0 framework.

As more and more of the data about what goes on in our world becomes available via network organizing structures, we will be able to regulate much more lightly, thus lowering the cost, and burden, of government and allowing innovation to prosper.

New York City, under Mayor Bloomberg, has been leading the way in using data and technology to locate and address problems in an efficient manner. But there is so much more that can be done in this direction. I hope that his efforts are adopted and evolved by his successor and all governments in the coming years.